🇸🇬 Can you train an LLM to speak Singlish in less than a day?

I’m from Singapore, and Singlish, also known as Colloquial Singaporean English, is what every Singaporean would say defines us as one.

I can confirm that ChatGPT already speaks Singlish, but I wondered: how long would it take to train an LLM to speak like us?

In 🚀 Day 2 of my Turning My Curiosity into Code Until I Land a Tech Job series, I shipped a little “Talk Like Me” experiment. I fed 23,989 WhatsApp replies between my mom and me (111k words, 6.4k unique tokens) into TinyLlama, slapped on LoRA + 4-bit quantization, and hit train on my Mac.
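The post doesn’t show how the chat became training data, so the following is only a minimal sketch. It makes two assumptions:

- The chat is a plain-text WhatsApp export where each line looks like `12/31/23, 9:15 PM - Mom: Want to order food?`. The real format varies by phone, OS, and locale.
- My messages appear under the sender name `Me`.

The sketch pairs each of my replies with the message right before it, then counts words. `parse_export` and `corpus_stats` are hypothetical helpers. Whitespace-split words are only a rough stand-in for however the original “unique tokens” figure was counted.

```python
import re

# Assumed export line format (varies by phone, OS and locale):
# "12/31/23, 9:15 PM - Mom: Want to order food?"
LINE_RE = re.compile(r"^\d{1,2}/\d{1,2}/\d{2,4}, [^-]+ - ([^:]+): (.*)$")


def parse_export(lines, me="Me"):
    """Turn exported chat lines into (their message, my reply) pairs."""
    messages = []
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m:  # continuation lines of multi-line messages are skipped
            messages.append((m.group(1), m.group(2)))
    pairs = []
    for (prev_sender, prev_text), (sender, text) in zip(messages, messages[1:]):
        # Keep only my first message after someone else's message.
        if sender == me and prev_sender != me:
            pairs.append((prev_text, text))
    return pairs


def corpus_stats(replies):
    """Total and unique lowercase whitespace-separated words across replies."""
    words = [w.lower() for reply in replies for w in reply.split()]
    return len(words), len(set(words))


sample = [
    "12/31/23, 9:15 PM - Mom: Want to order food?",
    "12/31/23, 9:16 PM - Me: ok can",
    "12/31/23, 9:16 PM - Me: what you want",
    "12/31/23, 9:17 PM - Mom: Chicken rice lah",
    "12/31/23, 9:18 PM - Me: Okay thanks!!!",
]
pairs = parse_export(sample)
print(pairs)
print(corpus_stats([reply for _, reply in pairs]))
```

Note that a second back-to-back message from me ("what you want") is dropped here. Merging consecutive messages into one reply is an equally reasonable choice.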

🧪 The Plan

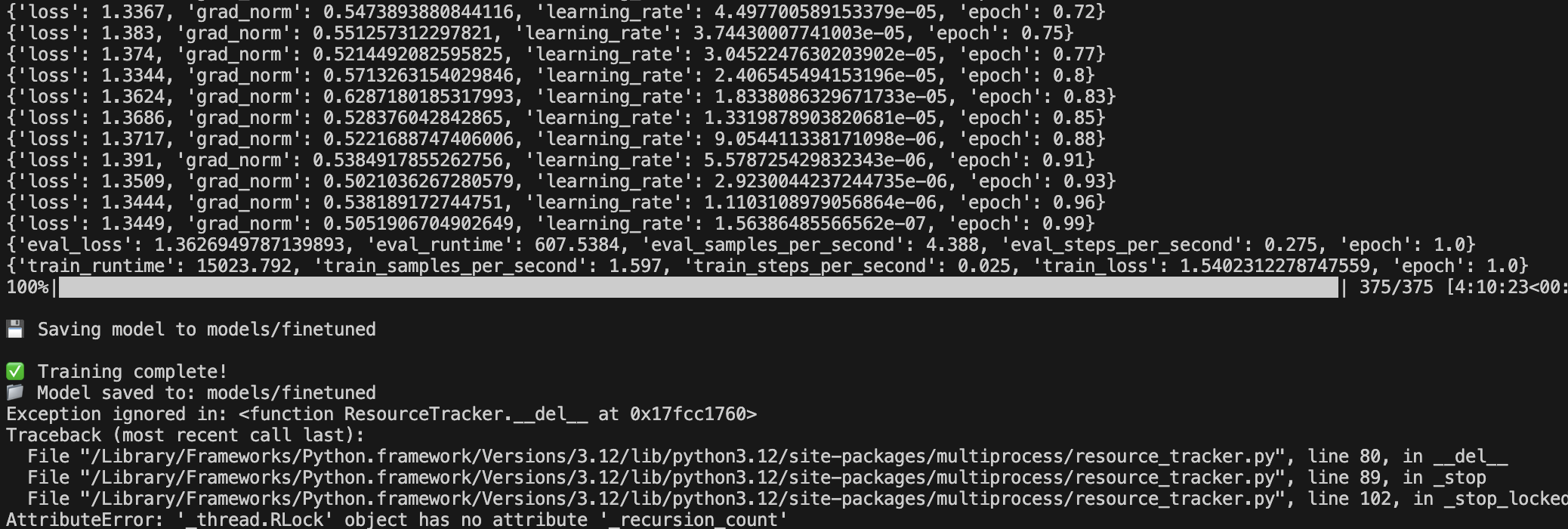

Since I had less than a day, I picked my tools and got straight to training:

- Epoch: 1

- Batch size: 16

- Learning rate: 2e-4

- Max length: 256
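For a sense of scale, one epoch at batch size 16 is not many weight updates. Here is a back-of-the-envelope sketch. It assumes every one of the 23,989 replies becomes one training example and that there is no gradient accumulation. The real count is a little lower, because some rows went to the eval set. `TrainConfig` and `optimizer_steps` are illustrative names, not part of any library.

```python
import math
from dataclasses import dataclass


@dataclass
class TrainConfig:
    # Values from the plan above; field names are illustrative.
    epochs: int = 1
    batch_size: int = 16
    learning_rate: float = 2e-4
    max_length: int = 256


def optimizer_steps(num_examples: int, cfg: TrainConfig) -> int:
    """Weight updates for a run: ceil(N / batch_size) batches per epoch, times epochs.

    Assumes one optimizer step per batch (no gradient accumulation) and that
    the last, partial batch is kept rather than dropped.
    """
    return cfg.epochs * math.ceil(num_examples / cfg.batch_size)


day_one = optimizer_steps(23_989, TrainConfig())
print(day_one)  # 1500
```

The 5-epoch, batch-size-8 plan later in this post gives `optimizer_steps(23_989, TrainConfig(epochs=5, batch_size=8))`. Under the same assumptions, that works out to 14,995 steps, roughly 10x as many updates.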

Six hours of training later, the logs looked solid.

Train loss came in at 1.54 and eval loss at 1.36, BUT it didn’t sound like me.

It didn’t even sound rational.
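Why can good-looking numbers still produce a bad clone? Loss only measures how well the model predicts the next token, not whether it sounds like me. Assume the logged values are mean per-token cross-entropy in nats. That is what Hugging Face causal LM models return, though the post doesn’t say which trainer was used. Under that assumption, the values convert to perplexity like this:

```python
import math


def perplexity(mean_cross_entropy: float) -> float:
    """Perplexity is exp of the mean per-token cross-entropy (natural log)."""
    return math.exp(mean_cross_entropy)


train_ppl = perplexity(1.54)  # ≈ 4.66
eval_ppl = perplexity(1.36)   # ≈ 3.90
print(f"train ppl {train_ppl:.2f}, eval ppl {eval_ppl:.2f}")
```

A perplexity around 4 means the model is, on average, roughly as unsure as if it were picking among four equally likely next tokens. Short, formulaic chat replies are easy to predict, so a low number here is compatible with bland, generic output.

The train figure may be an average over the whole run, including the early high-loss steps. If so, an eval loss below it is expected after a single epoch.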

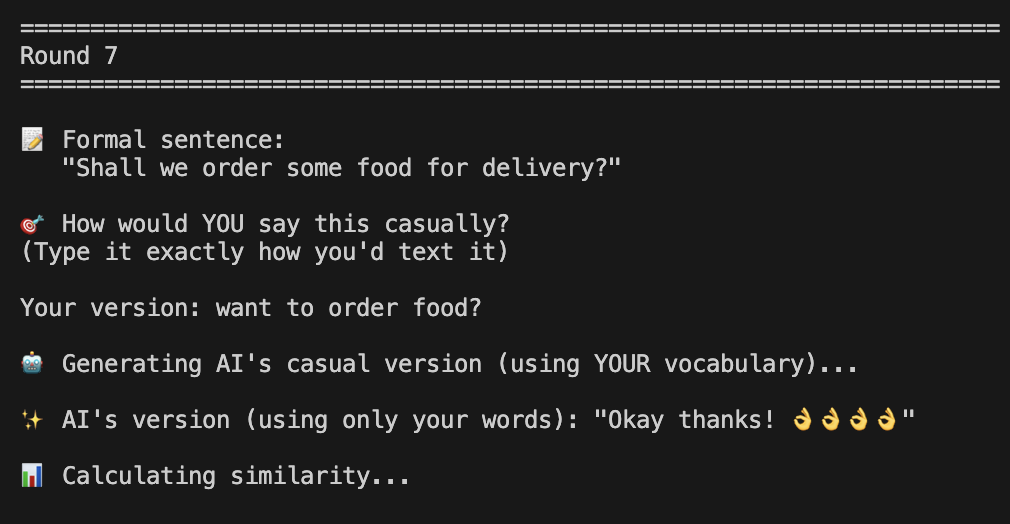

When your clone predicts that you would say

“Okay thanks!!! 👌👌👌👌”

when you actually want to convey

“Want to order food?”,

you know you’ve got work to do. 😅

Sometimes, though, the response was reasonable.

When prompted with “That sounds acceptable to me”, my typical Singlish response would be “ok can”.

But the trained model predicted “Ok ah? Ok lah but better check”.

As a Singaporean, it’s a pretty solid response.

Overall, I would give the trained model a 4/10.

💡 Self Reflection:

- Speed: a single epoch with large batches on CPU meant the model barely absorbed my cadence. It needs more training and more context.

- Context: truncating to 256 tokens chopped off lead-ins that carry tone. In Singlish, a single “ah” can also mean different things depending on how it’s said (See: https://www.youtube.com/watch?v=1NncLpn-fuU).

- Vocabulary constraints: without my extracted lexicon, it wandered into words I never use. But if I removed these constraints, it still hallucinated quite a bit.
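The post doesn’t say how the lexicon was extracted or enforced. Here is one hypothetical way to build it and measure drift:

1. Keep every word I use at least twice.
2. Score each generated reply by the share of its words found in that set.

`build_lexicon`, `lexicon_coverage`, and the `min_count=2` cutoff are assumptions for illustration. This sketch only measures drift; it does not constrain generation.

```python
import re
from collections import Counter

WORD_RE = re.compile(r"[a-z']+")


def words(text: str) -> list[str]:
    """Lowercase alphabetic words; emoji and punctuation are ignored."""
    return WORD_RE.findall(text.lower())


def build_lexicon(my_replies: list[str], min_count: int = 2) -> set[str]:
    """Hypothetical lexicon: every word I used at least min_count times."""
    counts = Counter(w for reply in my_replies for w in words(reply))
    return {w for w, c in counts.items() if c >= min_count}


def lexicon_coverage(text: str, lexicon: set[str]) -> float:
    """Share of a generated reply's words that appear in my lexicon."""
    ws = words(text)
    if not ws:
        return 0.0  # judgment call: empty or emoji-only replies score zero
    return sum(w in lexicon for w in ws) / len(ws)


my_replies = ["ok can", "ok lah", "can lah", "check first ok", "want to order food"]
lexicon = build_lexicon(my_replies)  # {"ok", "can", "lah"}
print(lexicon_coverage("ok can lah", lexicon))             # 1.0
print(lexicon_coverage("Certainly, that works", lexicon))  # 0.0
```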

🔁 If I Had More Time:

I would set it up like this:

- Epoch: 1 → 5

- Batch size: 16 → 8

- Learning rate: 2e-4 (unchanged; drop it if the next run doesn’t improve significantly)

- Max length: 256 → 512
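Before doubling the max length, it is worth checking how many training examples 256 actually cuts. With roughly 111k words over 23,989 replies (about 4.6 words each), a bare reply almost never hits the limit. The check is therefore only informative on the fully formatted strings that include preceding context.

Here is a minimal sketch. `truncation_rate` is a hypothetical helper. Its default whitespace word count undercounts real subword tokens, so for real numbers, pass a tokenizer-based length such as `lambda s: len(tokenizer(s)["input_ids"])`.

```python
from typing import Callable, Sequence


def whitespace_len(text: str) -> int:
    """Rough token count: whitespace-separated words (subword tokenizers give more)."""
    return len(text.split())


def truncation_rate(
    examples: Sequence[str],
    max_length: int,
    count_tokens: Callable[[str], int] = whitespace_len,
) -> float:
    """Fraction of examples longer than max_length, i.e. ones that would be truncated."""
    if not examples:
        return 0.0
    too_long = sum(count_tokens(e) > max_length for e in examples)
    return too_long / len(examples)


# Toy examples of 100, 300 and 600 words standing in for formatted training strings.
examples = ["word " * 100, "word " * 300, "word " * 600]
for limit in (256, 512):
    print(limit, round(truncation_rate(examples, limit), 2))
```

If the rate at 256 is already close to zero, raising the limit to 512 would change little. In that case, the missing lead-ins more likely come from how the context was assembled.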

Perhaps on another day of my Turning My Curiosity into Code Until I Land a Tech Job series?

💼 I’m currently looking for software engineering roles (backend, full-stack, or automation-focused). I’m based in Seattle and open to relocating within the United States.

🤝 Want a demo or to jam on similar ideas? Let’s talk!

🔗 LinkedIn: https://www.linkedin.com/in/sinclair-lim/

🔗 X: @sinclairlimzy

🔗 Portfolio: https://www.sinclairlim.com/

🔗 View the project on GitHub: https://github.com/sinclairlim/Day-3---LLM—talk-like-me-/blob/main/README.md