💻 We Need a Better LeetCode

day-6-the-better-leetcode.vercel.app

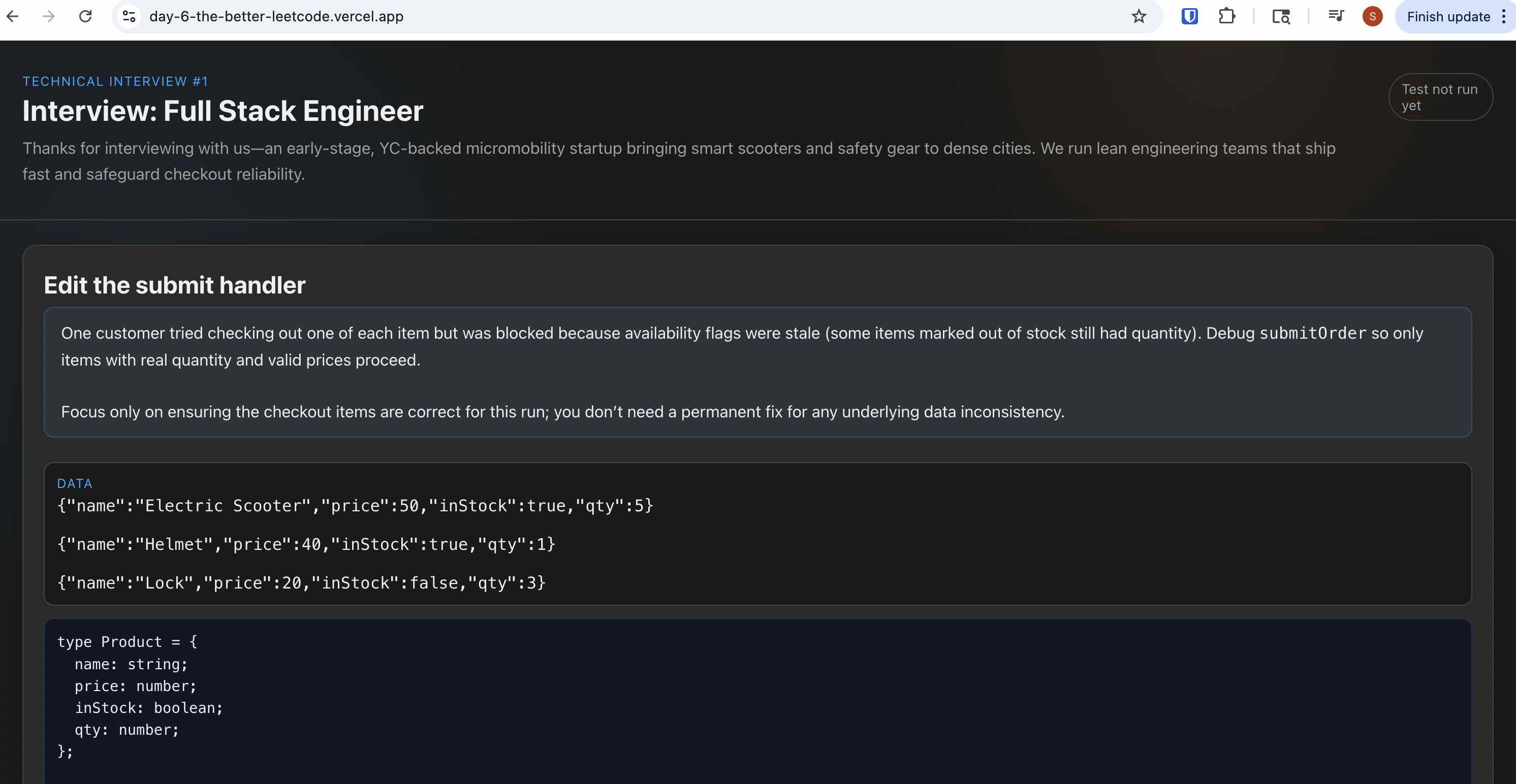

(Do give it a shot, and see if you can find the bug.)

In today’s world — especially in startups — we have to ship well and ship FAST.

Why make job applicants solve puzzles they can memorize with practice?

Instead, hand them a real production issue and see how quickly and safely they can ship a fix.

In 🚀 Day 6 of my Turning My Curiosity into Code Until I Land a Tech Job series, I built “Better LeetCode,” a break-fix playground where candidates drop into live scenarios with company context (for example, a broken checkout) and race from alert to patch.

I was interviewing with an online wholesaler at the time, so I built this scenario around one.

Spot the break, build the patch, and validate what really matters: capability under pressure.

Dear CTOs/hiring managers/candidates, let me know if you think this is the way forward for new-age technical interviews! I would love to hear your comments and include more features!

🧪 The Plan

Stack: React, Node/Express

Idea: Have the candidate understand the overall code structure, find out why it’s failing, and try to fix it

Speed aids: Pre-seeded logs, feature flags, and one-click test runner (but this comes with its drawbacks, which I will mention below)

After two sprints, I could drop in a “checkout fails on promo” incident and time myself from first log to verified fix.
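As a rough illustration, an incident like this could be described by a small data structure plus a timer. This is a minimal sketch, not the project's actual code: `Scenario`, `Attempt`, `timeToFixMs`, the log line, and the flag name are all hypothetical.

```typescript
// Hypothetical shape of a break-fix scenario; every name here is illustrative.
interface Scenario {
  id: string;
  title: string;
  seededLogs: string[];                  // pre-seeded logs shown to the candidate
  featureFlags: Record<string, boolean>; // flags the candidate may toggle
}

// One candidate run against a scenario, with timestamps in milliseconds.
interface Attempt {
  scenarioId: string;
  firstLogViewedAtMs: number;
  verifiedFixAtMs: number | null; // null until the test runner confirms a fix
}

// Elapsed time from first log to verified fix, or null if not yet fixed.
function timeToFixMs(attempt: Attempt): number | null {
  if (attempt.verifiedFixAtMs === null) return null;
  return attempt.verifiedFixAtMs - attempt.firstLogViewedAtMs;
}

const checkoutPromo: Scenario = {
  id: "checkout-fails-on-promo",
  title: "Checkout fails on promo",
  // Made-up log line, for illustration only.
  seededLogs: ["ERROR checkout: total could not be computed for promo order"],
  // Made-up flag name, for illustration only.
  featureFlags: { promoCodesEnabled: true },
};

const attempt: Attempt = {
  scenarioId: checkoutPromo.id,
  firstLogViewedAtMs: 1000,
  verifiedFixAtMs: 421000,
};

console.log(timeToFixMs(attempt)); // elapsed milliseconds for this run
```

Keeping the timer as a pure function over recorded timestamps means the same data can later feed other measurements without re-running the session.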

💡 Self Reflection

Feedback loops win: Immediate tests and logs cut time-to-recover more than adding more challenges. However, the instant checks currently rely on a set of guardrails, and these are not foolproof. With a small budget, calling an LLM API to check whether the real issue has been resolved would be a better option.
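To make the guardrail idea concrete, here is one way an instant check could work: run the candidate's patched function against a handful of hidden cases. This is a sketch under assumptions, not the checks the playground actually uses. The promo function signature, the cases, and `runGuardrails` are hypothetical.

```typescript
// Hypothetical signature for the function the candidate is asked to fix.
type PromoFn = (subtotalCents: number, promoPercent: number) => number;

interface GuardrailCase {
  subtotalCents: number;
  promoPercent: number;
  expectedCents: number;
}

// Illustrative hidden cases, including edge values a hard-coded patch would miss.
const guardrailCases: GuardrailCase[] = [
  { subtotalCents: 10000, promoPercent: 10, expectedCents: 9000 },
  { subtotalCents: 10000, promoPercent: 0, expectedCents: 10000 },
  { subtotalCents: 999, promoPercent: 100, expectedCents: 0 },
];

// Runs every case and collects failures instead of stopping at the first one.
function runGuardrails(fix: PromoFn): { passed: boolean; failures: string[] } {
  const failures: string[] = [];
  for (const c of guardrailCases) {
    const label = `subtotal=${c.subtotalCents}, promo=${c.promoPercent}%`;
    try {
      const actual = fix(c.subtotalCents, c.promoPercent);
      if (actual !== c.expectedCents) {
        failures.push(`${label}: expected ${c.expectedCents}, got ${actual}`);
      }
    } catch (err) {
      failures.push(`${label}: threw ${String(err)}`);
    }
  }
  return { passed: failures.length === 0, failures };
}

// Example candidate submissions (both made up for this sketch).
const goodFix: PromoFn = (subtotal, percent) =>
  Math.round((subtotal * (100 - percent)) / 100);
const badFix: PromoFn = (subtotal, percent) => subtotal - percent;

console.log(runGuardrails(goodFix).passed);
console.log(runGuardrails(badFix).failures);
```

Fixed cases like these are fast but only check outputs, so a patch can pass them without addressing the root cause, which is the gap an LLM-based review would aim to cover.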

Realistic + Practical > Clever: Fixing production bugs reveals habits (logs, hypotheses, rollbacks) that puzzles never show. It also shows how well candidates can bridge front-end and back-end.

Hints available: Light prompts kept momentum for nervous candidates without handing over the answer.

🔁 If I Had More Time

- Add more types of questions, contextualized to a company’s daily operations (using LLMs)

- Support more languages (Python, Go)

- Track more metrics, such as time-to-first-signal and rollback vs. hotfix rates

- Generate smarter scenarios that mutate bugs (null data, rate limits, schema drift) to avoid memorization by candidates
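The metrics item above could be computed from a simple event log per session. The sketch below is an assumption about how that might look. The event names are hypothetical, and "time-to-first-signal" is interpreted here as the time from the alert to the candidate's first log view or test run.

```typescript
type Resolution = "rollback" | "hotfix";

// Hypothetical session event; events are assumed to be in chronological order.
interface SessionEvent {
  type: "alert" | "log_opened" | "test_run" | "resolved";
  atMs: number;
  resolution?: Resolution; // only meaningful on "resolved" events
}

// Time from the alert to the first investigative action, or null if none.
function timeToFirstSignalMs(events: SessionEvent[]): number | null {
  const alertEvent = events.find((e) => e.type === "alert");
  if (!alertEvent) return null;
  const signal = events.find(
    (e) =>
      (e.type === "log_opened" || e.type === "test_run") &&
      e.atMs >= alertEvent.atMs
  );
  return signal ? signal.atMs - alertEvent.atMs : null;
}

// Share of resolved sessions that ended in a rollback vs. a hotfix.
function resolutionRates(sessions: SessionEvent[][]): Record<Resolution, number> {
  const counts: Record<Resolution, number> = { rollback: 0, hotfix: 0 };
  for (const events of sessions) {
    const resolved = events.find((e) => e.type === "resolved");
    if (resolved?.resolution) counts[resolved.resolution] += 1;
  }
  const total = counts.rollback + counts.hotfix;
  if (total === 0) return counts; // no resolved sessions yet
  return { rollback: counts.rollback / total, hotfix: counts.hotfix / total };
}

// Made-up sample session for illustration.
const sampleSession: SessionEvent[] = [
  { type: "alert", atMs: 0 },
  { type: "log_opened", atMs: 45000 },
  { type: "test_run", atMs: 120000 },
  { type: "resolved", atMs: 300000, resolution: "hotfix" },
];

console.log(timeToFirstSignalMs(sampleSession));
console.log(resolutionRates([sampleSession]));
```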

I’m currently looking for software engineering roles (backend, full-stack, or automation-focused), based in Seattle — open to relocation within the United States.

🤝 Want a demo or to jam on similar ideas? Let’s talk!

🔗 LinkedIn: https://www.linkedin.com/in/sinclair-lim/

🔗 X: @sinclairlimzy

🔗 Portf